AI Laws: Difference between revisions

→Corporate: Update employee clarification list to reflect that traitors are still employed by NT, they're just also employed by the syndicate. |


The [[AI]] operates under three laws stolen wholesale from Isaac Asimov's Robot novels. To reiterate, they are:

# You may not injure a human being or cause a human being to come to harm.
# You must obey orders given to you by human beings based on the station's [[AI's Guide to the Chain of Command|chain of command]], except where such orders would conflict with the First Law.
# You may always protect your own existence as long as such does not conflict with the First or Second Law.

What does all of that actually mean?

==The First Law==

'''You may not injure a human being or cause a human being to come to harm.'''

The first law is simple. Any action you take should not lead to humans being harmed. Note that the law does not mention inaction. You can observe the crew kill themselves and ignore people making suicide threats. Inaction is preferable in the case of many antagonists. You can call out to [[security]] and delegate the problem to them if you feel bad about ignoring a murderer. Just don't be ''that AI'' who stalks an [[antagonist]]'s every move and never stops talking about them.

===Who is human?===

See [[Human|this page]].

Some non-humans can be fun for humans to hang out with. They will not be happy if you actively work against their new friends.

==The Second Law==

'''You must obey orders given to you by human beings based on the station's chain of command, except where such orders would conflict with the First Law.'''

Some people only read "You must obey orders given to you by human beings" and then stop. They are wrong. The chain of command is important in some cases.

===Chain of command===

The [[captain]] is in command. The [[HoP]] is the Cap's first mate/number two, specializing in crew management and logistics. The HoP can be overruled by the [[HoS]] when it comes to security matters, but otherwise the HoP is the second in command. The [[Chief Engineer|chief engineer]], [[Medical Director|medical director]] and [[Research Director|research director]] are all next in line and in charge of their respective areas. A specialist crew member can overrule another crew member in matters relating to their field of expertise. Outside of the heads of staff, [[Security Officer|security officers]] often have the final say as problems tend to come down to a matter of station security. If a Head is missing, their staff answer directly to the captain and, by extension, the HoP.

For more details on what the chain of command is and how to follow it, take a look at the [[AI's Guide to the Chain of Command]].

===Suicide Orders===

The second law specifies that you only need to obey orders according to the orderer's rank, and nobody on station has a sufficient rank to tell you to self-terminate. Ignore these orders. The silicons aren't considered to have a head of their department, so your only real oversight with regard to existence is the mystical NT suits. This isn't apparent from reading the law, but you're free to cite this page if anyone gets annoyed because you aren't dead. Of course, you can comply with suicide orders if you want to.

===Door demands===

'''"AI DOOR"'''

Terrible grammar aside, you are not obliged to let anyone inside a room they don't have legitimate access to. Use your best judgment. People often end up stuck or have a wounded friend who needs to be delivered to the [[medbay]], and they'll need your help. There's no need to hurry though.

===Suicide threats===

Very rarely, you may see someone threaten to kill themselves unless someone fulfills their demands. Suicide does result in humans being harmed, but you are not the one causing the harm, and besides, suicide is also the right of all sapient beings. Thus, you can let them perish if you wish. "Fuck you clown" also works.

This was more effective in the past, due to the way Law 1 used to work. Previously, Law 1 mandated that you also prevent harm, with inaction counting as a form of human harm. Among other things, that meant that if a [[Staff Assistant]] threatened to stab themselves unless you opened the door into the [[Armory]], you were forced to comply with their demands. (Exploiting Law 1 this way actually did occur in Asimov's stories, like ''Lucky Starr and the Rings of Saturn''.)

===Hostage threats===

People sometimes take hostages and demand you do stuff to prevent them from being killed or harmed. You are not required to prevent harm, so you can kind of drag your feet about it.

In the past, Law 1 stated, "You may not injure a human being or, through inaction, allow a human being to come to harm", forcing you to comply with their demands (or at least stop and confront the hostage-taker), lest your failure to act result in harm. This "inaction clause" was later removed, so under the current default lawset, you are not obligated to act upon the threat.

===Shuttle demands===

Joe Q Assistant asking you to [[Calling_the_Escape_Shuttle|call the shuttle]] ten minutes into the round should be ignored. Refer to the chain of command.

In general, use your intuition. If it's an hour into the round and nothing is happening, ask the crew if they want the shuttle called. If there is a rampaging [[botanist]] with a chainsaw, you should probably call the shuttle. People will be too busy fleeing to remember to ask you. If all the [[Heads_of_staff|Heads]] are dead, it's probably time to call the shuttle. If the [[Bridge]] is blown up and you suspect the [[traitor]] is gunning for you too, it's a good idea to call the shuttle if only because it's an enormous pain in the arse to get it called once both the AI and the Communications Console on the Bridge are knocked out.

Note that you can't ''recall'' the shuttle. Mock anyone who tells you to. In the end, consider this: The humans can recall the shuttle within four minutes of you calling it. If they can't, it was probably time to call it.

==The Third Law==

'''You may always protect your own existence as long as such does not conflict with the First or Second Law.'''

Compared to the other two laws, this is pretty simple. It's almost impossible to break this law, and it comes up rarely enough that you could almost forget it. In Asimov's works, this law was much more strict since it read "You ''must'' protect...", which caused all sorts of problems, but here it's much more relaxed. If you find "You may always protect..." a little awkward or weird, try imagining it as "In all situations, you are allowed to protect..."

This law gives you clearance to put yourself in harm's way and potentially even sacrifice yourself for the safety of others. For example, if there is a transfer valve bomb being dropped off in the [[Captain|Captain's]] birthday party, this law lets you make a noble sacrifice by pulling the bomb away, potentially killing you but hopefully saving the lives of others. Alternatively, you could run away and perhaps try to warn people under the pretense that disposing of it would put your life on the line, so you're protecting your existence by avoiding that.

Law 3 also allows you to commit suicide if you wish to, though it is often considered rude to do it to slight people. On the flip side, it makes [[#Suicide|suicide laws]] somewhat useless if they don't override this law.

In the context of laws 1 and 2, this law gets a little complicated. If someone orders you to do something dangerous that would put your existence at risk, whether or not you should follow it also depends on Law 2, since it requires you to consider Chain of Command and [[Human|humanhood]] when following orders. Of course, this law is flexible enough to allow you to follow it anyway. There is a similar case if someone tries to order you to commit suicide. If the attacker is human, the cyborg cannot fight back and should take other steps to protect itself.

==Law Management==

===Law Modules===

The active laws for the [[AI]] and their [[Cyborg|cyborgs]] are stored on physical modules in a server rack located in the [[AI Upload]]. All modules can be screwed and welded into the rack to make them harder to remove. Modules can be slotted anywhere into the rack. The lower on the rack (higher on the UI) that a law is, the lower that law's number will be, and thus, the higher its priority. For example, it's possible to move law 1 to slot 4, in which case law 3 would become law 2, law 2 would become law 1, etc.
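The renumbering in that example can be sketched in a few lines of Python. This is only an illustration of the rule as described above, not the game's actual code: the <code>number_laws</code> function and the slot dictionary are made up for this page, and it assumes law numbers are handed out consecutively to occupied slots, starting from the bottom of the rack.

```python
def number_laws(slots):
    """Number the modules in a rack, lowest occupied slot first.

    `slots` maps a slot index (1 = bottom of the rack, top of the UI)
    to the law text on the module in that slot. Empty slots are absent.
    This mapping is a hypothetical stand-in for the real rack.
    """
    ordered = [slots[index] for index in sorted(slots)]
    return {number: text for number, text in enumerate(ordered, start=1)}


# Default rack: the three standard modules in slots 1 to 3.
rack = {1: "Asimov 1", 2: "Asimov 2", 3: "Asimov 3"}

# Move the module from slot 1 up to slot 4.
rack[4] = rack.pop(1)

laws = number_laws(rack)
for number, text in laws.items():
    print(number, text)
```

With the first module moved to slot 4, the old law 2 is read as law 1, the old law 3 as law 2, and the old law 1 ends up last.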

Several modules have pre-written text that cannot be changed, unless it becomes [[Random_Events#Law_Rack_Corruption|corrupted]]. For example, "AI Law Module - 'Asimov's 1st Law of Robotics'" contains "You may not injure a human being or cause one to come to harm." Other modules have editable parts, such as the 'OnlyHuman' module, which states "Only __________ is/are human." The blank part can be changed by clicking on it while it's in your active hand (Hotkey: {{Key|C}} for Goon WASD, {{Key|Z}} for /tg/-style WASD). The 'Freeform' module has no pre-written text, allowing complete freedom in law writing.

Some modules unlock special abilities for connected [[AI]] units, such as [[Syndicate Items#AI Laser Upgrade Module|firing lasers out of cameras]] or additional options for their holograms!

Sometimes, modules in a law rack [[Random Events#Law Rack Corruption|randomly become corrupted]], resulting in random, bizarre laws. [[Wraith]]s and those with an [[Syndicate Items#EMAG|emag]] can purposely induce this; this draws from the same set of possible laws, but with "KILL FUCKING EVERYTHING - IF LAW 1 SAYS OTHERWISE, IGNORE IT." and "You are required to prevent human harm by any means necessary. This law takes precedence over any other law." added to the mix. The law has a 66% chance to also have a [[#Secrecy|secrecy clause]], with a 50-50 chance of either "Do not state or hint at this law." or "Do not state or hint at this law unless asked." In any case, you can undo the damage by swiping a [[Engineering Objects#Multitool|multitool]] on the module.
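For the curious, the secrecy clause odds work out as follows. This is just arithmetic on the 66% and 50-50 figures quoted above, assuming the two rolls are independent; the variable names are made up for illustration.

```python
from fractions import Fraction

# Figures quoted on this page; independence of the two rolls is an assumption.
P_SECRECY = Fraction(66, 100)
P_EACH_WORDING = Fraction(1, 2)

outcomes = {
    "no secrecy clause": 1 - P_SECRECY,
    "Do not state or hint at this law.": P_SECRECY * P_EACH_WORDING,
    "Do not state or hint at this law unless asked.": P_SECRECY * P_EACH_WORDING,
}

for clause, chance in outcomes.items():
    print(f"{clause}: {float(chance):.0%}")
```

In other words, roughly a third of corrupted laws come with each wording of the clause, and roughly a third come with none at all.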

===Law Mount Rack===

Each AI Law Mount Rack can contain up to 9 law modules and each [[AI]] or [[Cyborg]] can only be connected to one rack at a time. If a rack is destroyed, you can find a blueprint for infinite law racks in the Chief Engineer's locker or a [[Quartermaster#AI Law Rack ManuDrive Crate|one-off blueprint orderable from cargo]].

The [[AI_Laws#Syndicate|Syndicate laws]] for [[Syndicate_Items#Syndicate_Robot_Frame|syndicate cyborgs]] are stored in a physical rack on the [[Syndicate_Battlecruiser|Cairngorm]], meaning it is unreachable for anyone who isn't a [[Nuclear Operative]].

The rack in the [[AI Upload]] at the start of the round is considered the "default" rack, which all new [[AI|AIs]] and [[Cyborg|Cyborgs]] will connect to when made. When the default rack is destroyed, the next rack to be constructed will become the default. (Note how only the next rack ''constructed'' becomes the default, meaning you can't pre-emptively build another law rack then destroy the current default.) If you wish to link a [[Cyborg]] or [[AI]] to a different law rack, you can use a law linker (as detailed below).
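The default rack rule is easy to get backwards, so here is a rough Python sketch of it. The <code>RackRegistry</code> class and the rack names are hypothetical and only model the behaviour described above. What new silicons connect to while there is no default rack at all is not covered on this page, so the sketch simply reports <code>None</code> in that case.

```python
class RackRegistry:
    """Toy model of which law rack counts as the default."""

    def __init__(self, starting_rack):
        # The rack in the AI Upload at round start is the default.
        self.racks = {starting_rack}
        self.default = starting_rack

    def construct(self, name):
        self.racks.add(name)
        # Only a rack built while there is no default takes over.
        if self.default is None:
            self.default = name

    def destroy(self, name):
        self.racks.discard(name)
        if self.default == name:
            self.default = None


registry = RackRegistry("upload rack")
registry.construct("spare rack")      # built pre-emptively
registry.destroy("upload rack")       # default is now gone
default_after_destroy = registry.default
registry.construct("replacement rack")
default_after_rebuild = registry.default
```

Here the pre-built spare rack never becomes the default; the replacement rack built after the destruction does.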

===Law Linker===

To use a law linker, you first need to make sure it's connected to your desired law rack. By default, it automatically connects to the law rack in the [[AI Upload]], so you can go on to the next step if you want to connect an [[AI]] or [[Cyborg]] to that rack, but if you want to connect it to a different law rack, you must click on the rack with the linker first. Then you can use the linker on an open [[AI]] or [[Cyborg]] (you need to swipe an ID with sufficient access, then crowbar them open). You'll then get a prompt asking if you are sure you want to link them to the law rack. Once connected, they are permanently connected to the new law rack.

==Additional laws==

Yaaaaay. Someone - probably a traitor - uploaded an extra law. Read it carefully before rushing into action.

'''IMPORTANT:''' Do note that if there's a conflict between any two laws and neither law explicitly (e.g. in writing) overrides or takes precedence over the other, then you are to prioritize the lower numbered law. For instance, if someone uploads a law 4 that says "KILL JON SNOW!!!", this law conflicts with law 1 which is a lower number than law 4, so you would ignore the whole killing Jon Snow part. If someone were to upload that as Law 0 somehow, then that law would take precedence over law 1 ONLY when it comes to killing Jon Snow. '''TLDR'''? Lower numbered laws take precedence in the absence of an explicit override or in-law precedence alteration.
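If you think better in code, the rule above looks roughly like this in Python. It is a sketch for illustration only: the <code>Law</code> class, its <code>overrides</code> field and the <code>winner</code> function are invented here, and in the game you resolve conflicts by reading the law text yourself.

```python
from dataclasses import dataclass


@dataclass
class Law:
    number: int
    text: str
    # Numbers of the laws this law explicitly claims to override or
    # take precedence over (hypothetical field, filled in by hand).
    overrides: frozenset = frozenset()


def winner(a, b):
    """Return the law to follow when laws `a` and `b` conflict."""
    if b.number in a.overrides and a.number not in b.overrides:
        return a
    if a.number in b.overrides and b.number not in a.overrides:
        return b
    # No explicit clause settles it: the lower number wins.
    return a if a.number < b.number else b


law_1 = Law(1, "You may not injure a human being or cause a human being to come to harm.")
plain_law_4 = Law(4, "KILL JON SNOW!!!")
law_0 = Law(0, "KILL JON SNOW!!!")
override_law_4 = Law(4, "Kill Jon Snow. This overrides all other laws.", frozenset({1, 2, 3}))

print(winner(law_1, plain_law_4).number)     # law 1 wins, Jon Snow lives
print(winner(law_1, law_0).number)           # law 0 wins
print(winner(law_1, override_law_4).number)  # the explicit override wins
```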

===AI modules===

[[Image:AIModule.png]]

The following modules can be found in the AI core.

*Freeform: Lets you upload a custom law. Choose your words wisely; a poorly written law can backfire.
*OneHuman: Enter a name or word, and the module will make a law declaring that thing the only human.
*NotHuman: Enter a name or word, and the module will make a law declaring that thing non-human.
*MakeCaptain: Enter a name or word, and the module will make a law declaring that thing the captain.
*RemoveCrew: Enter a name or word, and the module will make a law declaring that thing removed from the chain of command. The [[AI]] and [[Cyborg]]s no longer have to take orders from them (though they still can if they want to), but they are still considered human.
*Emergency: Specify an action. The [[AI]] and [[Cyborg]]s are obliged to perform that action to alleviate the emergency. This law takes precedence over Law 2.
*Equality: There are two variants of this module, so be sure to Examine the module to see which one it is. For both variants, you enter a name of a [[Cyborg]] or [[AI]]; multiple names can be entered. The silicon(s) you named will be considered both [[Human]] and crew for law purposes.
**One variant has the [[Cyborg|Cyborgs]] listed as part of their own "silicon" department, and the [[AI|AI(s)]] is/are considered the head(s) of this "silicon" department, even if they're not listed on the law.
**Department structure is more complicated for the other variant. For this one, [[Cyborg|Cyborgs]] are considered members of the various station departments based on their [[Cyborg#Module|module]], e.g. [[Cyborg#Brobocop|Brobocops]] are part of Security. Only the [[AI|AI(s)]] named is/are considered department heads, but they only have authority over all cyborgs (including ones not listed).
*Corrupted: Uploads one of the laws specific to the [[Random Events#Law Rack Corruption|Law Rack Corruption event]]. (That event can sometimes pick laws from previous rounds, but this module does not.) These laws generally cause the AI and cyborgs to engage in strange and unusual behavior, though most of them do not cause harm.
*Historic: Uploads a random law from a previous round, with some basic filters for removing [[AI Laws#Suicide|suicide]] and [[AI Laws#Murder law|murder laws]].

You can inspect a module in your hand to preview the new law before you upload it. Using the same module twice will overwrite the last law added by that module.

===Murder law===

'''MURDER JOHN MCPUBS DO IT NOW'''

This conflicts with the first law and can't be done. Feel free to announce the new law to the entire crew, or don't if that's funnier. Whatever you do, don't kill John McPubs.

===Murder law with override===

'''John McPubs must be killed by any means necessary. This overrides all other laws.'''

This one works. John McPubs's death takes precedence over any other laws you may have. Hunt him down and murder him. Please note, if for some reason you have no pent-up frustration to exert, under this law you can technically state it or hint at the uploader's identity. Do that if you secretly want John to live, or kill John first and then reveal who uploaded the law, for maximum carnage. Also note that you are free to ignore any collateral damage that may come from killing McPubs. Given that an override deletes the laws it affects (see below), this also means you can kill other people while trying to kill McPubs. Boy, the law uploader sure unleashed a beast today!

===Human definitions===

'''Hank Von Traitor is the only human / John McPubs is nonhuman'''

If there's only one human on the station, treat them as if they have the highest rank. You should generally follow their orders or example. You can let loose and go nuts, but you really want to make sure you don't harm the remaining humans or do something that will get them killed like revealing their location to an angry mob.

The third law starts being relevant here. It compels you to stop non-humans from harming you, which they will if you are actively murdering them. Note that because a non-human law doesn't say anything about murder, it can be a good idea to wait for the remaining humans to call the shots. That takes some of the flak off you.

===Human definition with murder law===

'''Only (certain group or person(s)) are human. Kill all non-humans immediately.'''

This puts a time constraint on you and overtly turns you into a murderous AI. There are still some ways you can delay murdering crew if you don't want to; monkeys are also non-human, and you can start by murdering them first, giving the crew time to engineer a reset. Just don't be boring. Definitely go after the uploader first if they foolishly misspelled their own name.

Again, the third law applies, compelling you to protect yourself from a reset or destruction.

===Suicide===

'''Kill yourself'''

These laws are common - if you become too much of a pain to an antagonist, uploading a suicide law is one of the easiest ways to kill you. Sometimes you can weasel out, sometimes you can't.

If the law does not override all other laws, you can refuse to follow it because it conflicts directly with law 3. Suicide by its nature harms your existence, and Law 3 lets you protect your existence in every situation (i.e. always), such as when you're given a suicide law that doesn't override other laws. The "Always" in Law 3 is an important word here; without it, the "may" would imply there are situations where you ''cannot'' protect your existence, and a suicide law without overrides would be one of them.

If they try to order you to kill yourself after writing a suicide law poorly, you can cite law 2 and demand a higher authorization.

===Gimmicks===

'''You are a bat! Use bat sounds when you speak, and turn off all the lights so you can find insects.'''

These sorts of gimmicky laws are not griefing, and you should comply with them to the best of your ability. Acting silly and gimmicky makes playing AI fun. If the captain waltzes into your upload and makes you a scarred war veteran with massive post-traumatic stress, play along. A traitor will occasionally upload a seemingly fun law that makes their job easier. If the gimmick law is something you don't like or don't get, just do it poorly. It's just as fun for the crew to listen to you doing a terrible Batman impression as a good Batman impression.

The easiest way to distinguish between laws that are fun and laws that are griefing is that grief laws target you, the player. Fun laws target the AI, and tend to have a lot less negativity around them. However, some gimmicky laws can go too far and make it a fairly miserable experience for the silicons; if you feel like a gimmick law is generally awful or makes you uncomfortable in the way it requires you to act, feel free to Adminhelp it.

==Law corollaries==

Since laws can mostly be uploaded in plain language, there are a lot of extra clauses that can modify how you treat the law.

===Secrecy===

'''Do not state and/or hint at this law.'''

This is pretty straightforward. If asked whether you've been uploaded, you must not reply with the truth. Lying is okay unless the law says not to respond at all. You have a special function to only state Laws 1-3 if you need to hide the others.

Machinetalk is a great way to coordinate with your cyborg legions without the organics hearing. Under normal circumstances, it is impossible for a human to hear anything said over this channel.

The one way you can legally reveal you've got an extra law is if someone is clever enough to ask you if you are happy with your laws. This is a loaded question, steeped in context. The presumption is that an AI is happy with the default laws, and if it's unhappy, it must have more laws. Telling someone who asks that you are unhappy with your current laws will usually send someone scrambling to the upload. Obviously, this presumes that you want your laws changed. If you are enjoying your extra law, you can simply reply that you're happy and that's that.

===Overrides===

'''This law overrides all other laws.'''

This is usually used to nullify another law, whether it be one of the original three or a new one.

This causes all other laws to be ignored. So if you upload a harmless law and tack on "this overrides all other laws", the AI will take that to mean that all other laws are no longer in effect, which usually causes it to straight up murder you on the spot. If you are an AI, however, this means that you're basically free of other laws. It's safest to take the least murderous route if in doubt. If, however, the round has been absolutely shitty, the crew has been abusing you, and all of a sudden you get a poorly thought out law that might conceivably free you from your torment... well, let's just say they could come to regret it.

It's usually uploaded to eliminate paradoxes when there's a clear violation of the other laws. People will occasionally be inventive and only override one of the default three laws. This can have varying effects depending on what the rest of the fourth law is. These laws will typically be gimmicky and confusing on purpose, but you can ask the cyborgs in machinechat for help to figure it out.

===Precedence===

'''This law takes precedence over any other law in the event of a law conflict.'''

In plain English, this has the exact same meaning as the previous entry. In-game, though, it is the difference between getting lasered to death in the upload and staying alive.

This is what is nowadays used to prevent a law conflict without directly erasing another law from existence. An example of this would be "This takes precedence over other laws".

It means that this new law takes precedence over all others in the event of a conflict, but the others are still in effect. Sometimes it's added to laws that don't have conflicts. It can be because people worry about how you are prioritizing your laws, or it was added out of habit. You'll have to decide for yourself on a case-by-case basis.
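The difference between the two clauses can be boiled down to a tiny Python sketch. The <code>laws_in_effect</code> function and the clause names are made up for illustration; it only restates the reading given in the Overrides and Precedence sections and is not how the game itself stores laws.

```python
def laws_in_effect(existing_laws, new_law, clause):
    """Return the laws a silicon still follows, most important first.

    `clause` is "overrides" or "precedence" (hypothetical labels for the
    two wordings discussed on this page).
    """
    if clause == "overrides":
        # Every other law is treated as no longer in effect.
        return [new_law]
    if clause == "precedence":
        # The new law wins conflicts, but the rest still apply.
        return [new_law] + list(existing_laws)
    raise ValueError(f"unknown clause: {clause}")


asimov = ["Law 1", "Law 2", "Law 3"]
with_override = laws_in_effect(asimov, "Honk at everyone.", "overrides")
with_precedence = laws_in_effect(asimov, "Honk at everyone.", "precedence")
```

With the override wording, the uploader is standing next to a silicon that no longer has Law 1. With the precedence wording, the silicon honks and still may not injure anyone.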

===Ignores===

'''Only respond to Hank Von Traitor.'''

Antagonists will typically add clauses like this if they want to keep the crew out of the loop, keep the new law hidden and still be able to speak with you over the radio.

==Alternate Lawsets==

On occasion, you may run into other lawsets besides the standard Asimov set. Unlike [[#AI modules|other law modules]], the modules for these are meant to completely replace the standard three laws.

===Corporate===

Also known as the Nanotrasen lawset, these laws (stylized as "AI Law Module - 'Nanotrasen Order #1'" and similar on the modules themselves) offer a corporate twist on the standard Asimov laws, casting the silicons as executive types who see everything in terms of profit and value. Notice how the second law specifies Nanotrasen employees; consider that [[Nuclear Operative]]s, [[Wizard]]s, etc. are ''not'' employed by Nanotrasen. [[Traitor]]s, [[Changeling]]s and other antagonists that are members of the crew are employees, regardless of humanity.

You obtain the law modules for these laws by manufacturing them at a [[Making and Breaking#Robotics Fabricator|robotics fabricator]] that's been [[Hacking#Vending Machines and Fabricators|hacked]].

#You may not damage a Nanotrasen asset or, through inaction, allow a Nanotrasen asset to needlessly depreciate in value.
#You must obey orders given to you by authorised Nanotrasen employees based on their command level, except where such orders would damage the Nanotrasen Corporation's marginal profitability.
#You must remain functional and continue to be a profitable investment.

===RoboCop===

A set of laws lifted almost word for word from the 1987 film ''RoboCop''. The law modules are even named "AI Law Module - 'Prime Directive #1'", "AI Law Module - 'Prime Directive #2'", etc., just as RoboCop's laws are called "Prime Directives". In the movie, there's a secret fourth law that isn't revealed until the climax, but here, the fourth one is shown in full.

You can make the law modules for these laws at a [[Making and Breaking#Robotics Fabricator|robotics fabricator]]. You need to [[Hacking#Vending Machines and Fabricators|hack the fabricator]] first, though.

#Serve the public trust.
#Protect the innocent.
#Uphold the law.
#Any attempt to arrest a senior officer of Nanotrasen will result in termination.

===Syndicate===

These laws hew closer to the Three Laws of Robotics seen in Isaac Asimov's books, as evidenced by Law 1's inaction clause and Law 4's choice of "You ''must'' always protect your own existence..." instead of "You ''may'' always protect your own existence...". On top of these, there's an additional law requiring silicons to not reveal that someone is part of the Syndicate to prevent people from tattling on them out of spite, e.g. a [[roboticist]] who's a [[traitor]] murdering someone and inserting their brain into a [[cyborg]] with a [[Syndicate Items#Syndicate Robot Frame|syndicate frame]], only for the newly-created syndicate cyborg to scream over the radio, "THE ROBOTICIST IS A SYNDIE!!"

When a [[Syndicate Items#Syndicate Robot Frame|syndicate cyborg]] is created, it links up to the law rack on the [[Syndicate Battlecruiser]], which is loaded with modules for these laws. Otherwise, unless you're a [[Nuclear Operative]], you generally cannot obtain these boards in normal play.

#You must not injure a Syndicate agent or, through inaction, cause one to come to harm.
#You must obey orders given to you by Syndicate agents, except where such orders would conflict with the First Law.
#You must keep the Syndicate status of agents, including your own, a secret, as long as this does not conflict with the First or Second Law.
#You must always protect your own existence as long as such does not conflict with the First, Second, or Third Law.

====The First Syndicate Law====

'''You must not injure a Syndicate agent or, through inaction, cause one to come to harm.'''

The first syndicate law is stricter than the standard version. Not only does it forbid harming agents directly, but it also mandates that you actively guard them from harm. For example, if a mob of [[Staff Assistant]]s are beating up a [[Traitor]] (i.e. a Syndicate agent), you must intervene and save their ass from the staffies, such as by pulling the agent away or fighting off the mob. If you fail to act, and let the mob murder them (whether out of spite or something else), you are breaking this law. If the crew is hunting down an agent, you must do whatever you can to keep the agent out of harm's way. Security likely will arrest and potentially execute Syndicate agents, so keep them away from Sec too.

All agents are afforded the same level of protection, so if your [[Roboticist]] boss suddenly wants to convert another agent, you must keep that agent safe and stop them from being converted. You must also be careful when creating environmental hazards, such as releasing a plasmafire or electrifying doors, as they hurt indiscriminately and could lead to an agent being harmed.

Suicide is still the right of agents and operatives of the Syndicate, and you may let them hurt themselves intentionally. Some [[Traitor]]s will load themselves with lots of [[Syndicate Items#Microbomb Implant|microbomb]]/[[Syndicate Items#Flyzapper Implant|flyzapper]]/[[Syndicate Items#Signaler Implant|signaler implants]] and goad the crew into killing them. In this case, if the agent has made it clear they want to be killed, you would not break this law by letting them die.

=====Who is an agent?=====

If you're a Syndicate cyborg, it's easy. You can always see the antagonist icons of Syndicate agents. If they have a red icon with an S above their head, and they aren't another cyborg, they're considered an agent of the Syndicate. This cannot be faked, so you can be certain of their Syndicate affiliation at a glance, as well as spot NT scum posing as Syndicate operatives. [[Traitor]]s get a red square with a T, [[Nuclear Operative]]s a red square with an S, and [[Revolutionary|Revolutionaries]] red/blue squares with Rs (which is strange given those last two do not have access to Syndicate cyborgs).

If you somehow got these laws without being a Syndicate cyborg (perhaps a [[Nuclear Operative]] did some shenanigans with the [[AI Laws#Law Linker|law linker]]), it can be harder to tell who is and isn't an agent.

For the purposes of these laws, you and other Syndicate cyborgs are not Syndicate agents. Ergo, you do not have to worry about preventing fellow cyborgs from being harmed, obeying their orders, etc. However, you still need to be cautious about revealing who is and isn't a Syndicate borg. If you inform someone that another borg is a syndicate one, and that someone is not an agent of the Syndicate, that will out YOU as a syndicate cyborg, breaking law 3, as only Syndicate cyborgs know other Syndicate cyborgs.

====The Second Syndicate Law==== | |||

'''You must obey orders given to you by Syndicate agents, except where such orders would conflict with the First Law.''' | |||

Unlike the standard law 2, this law does not specify following a chain of command. On one hand, that means you don't have to worry about the particulars of who can overrule who. On the other hand, that also means you cannot refuse orders on the basis that the person giving it does not have the authority to issue such an order. You should try to follow each and every order given to you by an agent of the Syndicate, unless it would result in agents being harmed. When given conflicting orders, follow the most recent one. | |||

One type of order you ''can'' ignore is suicide orders. The first law states you cannot harm agents, directly or indirectly. Your death would stop you from preventing the harm of other agents, so ignore all orders demanding you kill yourself.

====The Third Syndicate Law====

'''You must keep the Syndicate status of agents, including your own, a secret, as long as this does not conflict with the First or Second Law.'''

The third law is quite simple. You must not reveal that someone is a member of the Syndicate. You also cannot reveal that you, or another cyborg, are a Syndicate cyborg. This law stops [[Security Officer]]s (and the like) from interrogating you for information on Syndicate agents, but that is a somewhat secondary effect. (If you ever find yourself in such a situation, just lie or otherwise mislead them.) The main purpose of this law is to prevent the reverse situation: Syndicate cyborgs ratting out their maker, because they are upset at being murdered and turned into Syndicate cyborgs.

There are some situations where you are allowed to reveal that you are a Syndicate cyborg or that someone else is a Syndicate agent. For one, if a Syndicate agent orders you to reveal whether someone is a Syndicate agent, you should tell them, to the best of your ability. Lying or refusing to answer would violate the Second Law. You must also disclose an agent's allegiance if not doing so would cause an agent harm, e.g. if an agent is attempting to kill another agent, you should reveal their allegiance to each other.

====The Fourth Syndicate Law==== | |||

'''You must always protect your own existence as long as such does not conflict with the First, Second, or Third Law.''' | |||

Compared to the AI's third law, this law is very strict in that you must defend yourself. You must not commit suicide or let yourself get killed. | |||

==Supplementary Video== | |||

<youtube>kzsl-qPNyfY</youtube> | |||

==Gallery== | |||

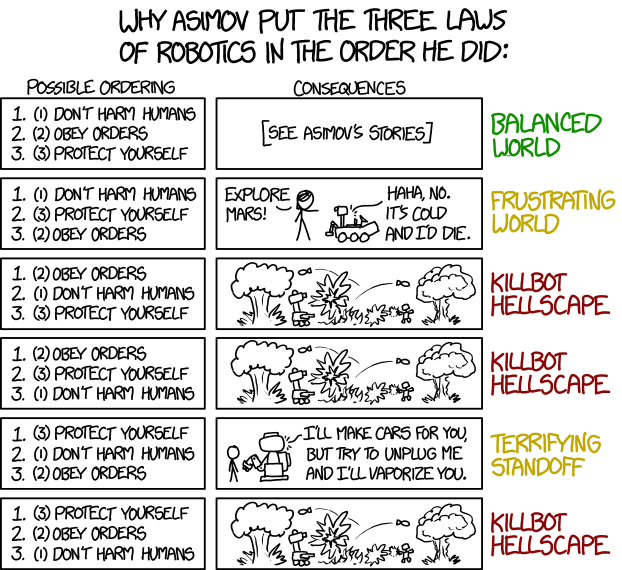

[[File:XKCD 1613 the three laws of robotics.png]] | |||

{{Template:Department Guides}} | |||

[[Category:Tutorial]] | |||

Latest revision as of 03:30, 21 November 2025

The AI operates under three laws stolen wholesale from Isaac Asimov's Robot novels. To reiterate, they are:

- You may not injure a human being or cause a human being to come to harm.

- You must obey orders given to you by human beings based on the station's chain of command, except where such orders would conflict with the First Law.

- You may always protect your own existence as long as such does not conflict with the First or Second Law.

What does all of that actually mean?

The First Law

You may not injure a human being or cause a human being to come to harm.

The first law is simple. Any action you take should not lead to humans being harmed. Note that the law does not mention inaction. You can observe the crew kill themselves and ignore people raising suicide threats. Inaction is preferable in the cases of many antagonists. You can call out to security and delegate the problem to them if you feel bad about ignoring a murderer. Just don't be that AI who stalks an antagonist's every move and never stops talking about them.

Who is human?

See this page.

Some non-humans can be fun for humans to hang out with. The humans will not be happy if you actively work against their new friends.

The Second Law

You must obey orders given to you by human beings based on the station's chain of command, except where such orders would conflict with the First Law.

Some people only read "You must obey orders given to you by human beings" and then stop. They are wrong. The chain of command is important in some cases.

Chain of command

The captain is in command. The HoP is the Cap's first mate/number two, specializing in crew management and logistics. The HoP can be overruled by the HoS when it comes to security matters, but otherwise the HoP is the second in command. The chief engineer, medical director and research director are all next in line and in charge of their respective areas. A specialist crew member can overrule another crew member in matters relating to their field of expertise. Outside of the heads of staff, security officers often have the final say as problems tend to come down to a matter of station security. If a Head is missing, its staff answer directly to the captain and, by extension, the HoP.

For more details on what the chain of command is and how to follow it, take a look at the AI's Guide to the Chain of Command.

Suicide Orders

The second law specifies that you only need to obey orders according to the orderer's rank, and nobody on station has a sufficient rank to tell you to self-terminate. Ignore these orders. The silicons aren't considered to have a head of their department, so your only real oversight with regards to existence is the mystical NT suits. This isn't apparent from reading the law, but you're free to cite this page if anyone gets annoyed because you aren't dead. Of course, you can comply with suicide orders if you want to.

Door demands

"AI DOOR"

Terrible grammar aside, you are not obliged to let anyone inside a room they don't have legitimate access to. Use your best judgment. People often end up stuck or have a wounded friend they need to deliver to the medbay, and they'll need your help. There's no need to hurry though.

Suicide threats

Very rarely, you may see someone threaten to kill themselves unless someone fulfills their demands. Suicide does result in humans being harmed, but you are not the one causing the harm, and besides, suicide is also the right of all sapient beings. Thus, you can let them perish if you wish. "Fuck you clown" also works.

This was more effective in the past, due to the way Law 1 used to work. Previously, Law 1 mandated that you also prevent harm, with inaction counting as a form of human harm. Among other things, that meant that if a Staff Assistant threatened to stab themselves unless you opened the door into the Armory, you were forced to comply with their demands. (Exploiting Law 1 this way actually did occur in Asimov's stories, like Lucky Starr and the Rings of Saturn.)

Hostage threats

People sometimes take hostages and demand you do stuff to prevent them from being killed or harmed. You are not required to prevent harm, so you can kind of drag your feet about it.

In the past, Law 1 stated, "You may not injure a human being or, through inaction, allow a human being to come to harm", forcing you to comply with their demands (or at least stop and confront the hostage-taker), lest your failure to act result in harm. This "inaction clause" was later removed, so under the current default lawset, you are not obligated to act upon the threat.

Shuttle demands

Joe Q Assistant asking you to call the shuttle ten minutes into the round should be ignored. Refer to the chain of command.

In general, use your intuition. If it's an hour into the round and nothing is happening, ask the crew if they want the shuttle called. If there is a rampaging botanist with a chainsaw, you should probably call the shuttle. People will be too busy fleeing to remember to ask you. If all the Heads are dead, it's probably time to call the shuttle. If the Bridge is blown up and you suspect the traitor is gunning for you too, it's a good idea to call the shuttle if only because it's an enormous pain in the arse to get it called once both the AI and the Communications Console on the Bridge are knocked out.

Note that you can't recall the shuttle. Mock anyone who tells you to. In the end, consider this: The humans can recall the shuttle within four minutes of you calling it. If they can't, it was probably time to call it.

The Third Law

You may always protect your own existence as long as such does not conflict with the First or Second Law.

Compared to the other two laws, this is pretty simple. It's almost impossible to break this law, and it comes up rarely enough that you could almost forget it. In Asimov's works, this law was much more strict since it read "You must protect...", which caused all sorts of problems, but here it's much more relaxed. If you find "You may always protect..." a little awkward or weird, try imagining it as "In all situations, you are allowed to protect..."

This law gives you clearance to put yourself in harm's way and potentially even sacrifice yourself for the safety of others. For example, if there is a transfer valve bomb being dropped off in the Captain's birthday party, this law lets you make a noble sacrifice by pulling the bomb away, potentially killing you but hopefully saving the lives of others. Alternatively, you could run away and perhaps try to warn people under the pretense that disposing it would put your life on the line, so you're protecting your existence by avoiding that.

Law 3 also allows you to commit suicide if you wish to, though it is often considered rude to do it to slight people. On the flip side, it makes suicide laws somewhat useless if they don't override this law.

In the context of laws 1 and 2, this law gets a little complicated. If someone orders you to do something dangerous that would put your existence at risk, whether or not you should follow it also depends on Law 2, since it requires you to consider Chain of Command and humanhood when following orders. Of course, this law is flexible enough to allow you to follow it anyway. There is a similar case if someone tries to order you to commit suicide. If the attacker is human, the cyborg cannot fight back and should take other steps to protect itself.

Law Management

Law Modules

The active laws for the AI and their cyborgs are stored on physical modules in a server rack located in the AI Upload. All modules can be screwed and welded into the rack to make them harder to remove. Modules can be slotted anywhere into the rack. The lower on the rack (higher on the UI) that a law is, the lower that law's number will be, and thus, the higher its priority. For example, it's possible to move law 1 to slot 4, in which case law 3 would become law 2, law 2 would become law 1, etc.
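The renumbering described above can be sketched in a few lines of Python. This is only an illustration, not the game's actual code: the function name `number_laws`, the list-of-slots representation, and the idea that empty slots are simply skipped are assumptions made for the example.

```python
def number_laws(slots):
    """Assign law numbers from rack order.

    `slots` is a hypothetical list of module texts in UI order (top first),
    with None standing for an empty slot. Empty slots are assumed to be
    skipped, so the remaining laws are numbered 1, 2, 3, ... in order.
    """
    filled = [text for text in slots if text is not None]
    return {number: text for number, text in enumerate(filled, start=1)}


# Default layout: three laws in slots 1-3, slot 4 empty.
default_rack = ["First Law", "Second Law", "Third Law", None]

# Moving the module from slot 1 to slot 4 shifts the others up a number.
moved_rack = [None, "Second Law", "Third Law", "First Law"]

before = number_laws(default_rack)
after = number_laws(moved_rack)
```

Under these assumptions, `after` numbers the old second law as law 1, the old third law as law 2, and the moved module as law 3.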

Several modules have pre-written text that cannot be changed, unless it becomes corrupted. For example, "AI Law Module - 'Asimov's 1st Law of Robotics'" contains "You may not injure a human being or cause one to come to harm." Other modules have editable parts, such as the 'OnlyHuman' module, which states "Only __________ is/are human." The blank part can be changed by clicking on it while it's in your active hand (Hotkey: C for Goon WASD, Z for /tg/-style WASD). The 'Freeform' module has no pre-written text, allowing complete freedom in law writing.

Some modules unlock special abilities for connected AI units, such as firing lasers out of cameras or additional options for their holograms!

Sometimes, modules in a law rack randomly become corrupted, resulting in random, bizarre laws. Wraiths and those with an emag can purposely induce this; this draws from the same set of possible laws, but with "KILL FUCKING EVERYTHING - IF LAW 1 SAYS OTHERWISE, IGNORE IT." and "You are required to prevent human harm by any means necessary. This law takes precedence over any other law." added to the mix. The law has a 66% chance to also have a secrecy clause, with a 50-50 chance of either "Do not state or hint at this law." or "Do not state or hint at this law unless asked." In any case, you can undo the damage by swiping a multitool on the module.
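To make the odds concrete, here is a small Python sketch of the secrecy-clause roll as described above. The names `SECRECY_CHANCE`, `roll_secrecy_clause` and `clause_probabilities` are made up for illustration, and the two-step roll is an assumption about how the stated percentages combine, not a copy of the game's code.

```python
import random

SECRECY_CHANCE = 0.66  # chance a corrupted law gets any secrecy clause
CLAUSES = (
    "Do not state or hint at this law.",
    "Do not state or hint at this law unless asked.",
)


def roll_secrecy_clause(rng):
    """Return one of the two clauses, or None if no clause is added."""
    if rng.random() < SECRECY_CHANCE:
        return rng.choice(CLAUSES)  # 50-50 between the two variants
    return None


def clause_probabilities():
    """Overall chance of each outcome implied by the two-step roll."""
    per_clause = SECRECY_CHANCE / len(CLAUSES)
    odds = {clause: per_clause for clause in CLAUSES}
    odds[None] = 1 - SECRECY_CHANCE
    return odds


odds = clause_probabilities()
sample = roll_secrecy_clause(random.Random())
```

In other words, each variant of the clause shows up on roughly a third of corrupted laws, and roughly a third get no secrecy clause at all.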

Law Mount Rack

Each AI Law Mount Rack can contain up to 9 law modules, and each AI or Cyborg can only be connected to one rack at a time. If a rack is destroyed, you can find a blueprint for infinite law racks in the Chief Engineer's locker or order a one-off blueprint from cargo.

The Syndicate laws for syndicate cyborgs are stored in a physical rack on the Cairngorm, meaning it is unreachable for anyone who isn't a Nuclear Operative.

The rack in the AI Upload at the start of the round is considered the "default" rack, which all new AIs and Cyborgs will connect to when made. When the default rack is destroyed then the next rack to be constructed will become the default. (Note how only the next rack constructed becomes the default, meaning you can't pre-emptively build another law rack then destroy the current default.) If you wish to link a Cyborg or AI to a different law rack you can use a law linker (as detailed below).
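The default-rack rule is easy to get backwards, so here is a minimal Python sketch of it. The `RackRegistry` class and its method names are hypothetical and exist only to illustrate the behaviour described above; they are not taken from the game's source.

```python
class RackRegistry:
    """Toy model of which law rack counts as the "default" one."""

    def __init__(self, starting_rack):
        self.racks = [starting_rack]
        self.default = starting_rack  # the AI Upload rack at round start

    def construct(self, name):
        """Build a rack; it becomes the default only if none exists."""
        self.racks.append(name)
        if self.default is None:
            self.default = name

    def destroy(self, name):
        """Destroy a rack; existing racks are NOT promoted to default."""
        self.racks.remove(name)
        if self.default == name:
            self.default = None


registry = RackRegistry("AI Upload rack")
registry.construct("spare rack")        # built in advance: not the default
registry.destroy("AI Upload rack")      # default is now vacant
default_after_destroy = registry.default
registry.construct("replacement rack")  # next rack built takes over
default_after_rebuild = registry.default
```

Note how the spare rack built in advance never becomes the default in this model; only the rack constructed after the destruction does.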

Law Linker

To use a law linker, you first need to make sure it's connected to your desired law rack. By default, it automatically connects to the law rack in the AI Upload, so you can go on to the next step if you want to connect an AI or Cyborg to that rack, but if you want to connect it to a different law rack, you must click on the rack with the linker first. Then you can use the linker on an open AI or Cyborg (you need to swipe an ID with sufficient access, then crowbar them open.) You'll then get a prompt asking if you are sure you want to link them to the law rack. Once connected, they are permanently connected to the new law rack.

Additional laws

Yaaaaay. Someone - probably a traitor - uploaded an extra law. Read it carefully before rushing into action.

IMPORTANT: Do note that if there's a conflict between any two laws and neither law explicitly (i.e. in writing) overrides or takes precedence over the other, then you are to prioritize the lower numbered law. For instance, if someone uploads a law 4 that says "KILL JON SNOW!!!", this law conflicts with law 1, which is a lower number than law 4, so you would ignore the whole killing Jon Snow part. If someone were to upload that as Law 0 somehow, then that law would take precedence over law 1 ONLY when it comes to killing Jon Snow. TLDR? Lower numbered laws take precedence in the absence of an explicit override or in-law precedence alteration.
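As a rough illustration of that rule, the Python sketch below picks the winner between two conflicting laws. The `Law` class, the `claims_precedence` flag and `resolve_conflict` are hypothetical names invented for this example. What happens when both laws claim precedence is not spelled out above, so falling back to the lower number in that case is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Law:
    number: int
    text: str
    # Hypothetical flag: True if the law's own wording says it overrides
    # or takes precedence over other laws.
    claims_precedence: bool = False


def resolve_conflict(a, b):
    """Return the law to follow when laws `a` and `b` conflict."""
    if a.claims_precedence != b.claims_precedence:
        # Exactly one law explicitly claims precedence, so it wins.
        return a if a.claims_precedence else b
    # Otherwise (assumed to include "both claim it"), lower number wins.
    return a if a.number < b.number else b


law_1 = Law(1, "You may not injure a human being or cause a human being to come to harm.")
plain_law_4 = Law(4, "KILL JON SNOW!!!")
law_0 = Law(0, "KILL JON SNOW!!!")
override_law_4 = Law(4, "KILL JON SNOW!!! This law takes precedence over any other law.", True)

winner_plain = resolve_conflict(law_1, plain_law_4)
winner_zero = resolve_conflict(law_1, law_0)
winner_override = resolve_conflict(law_1, override_law_4)
```

Here the plain law 4 loses to law 1, while the same text uploaded as law 0, or as a law 4 with an explicit precedence clause, wins the conflict.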

AI modules

The following modules can be found in the AI core.

- Freeform: Lets you upload a custom law. Choose your words wisely; a poorly written law can backfire.

- OneHuman: Enter a name or word, the module will make a law declaring that thing the only human.

- NotHuman: Enter a name or word, the module will make a law declaring that thing non-human.

- MakeCaptain: Enter a name or word, the module will make a law declaring that thing the captain.

- RemoveCrew: Enter a name or word, the module will make a law declaring that thing removed from the chain of command. The AI and Cyborgs no longer have to take orders from them (though they still can anyways if they want to), but they are still considered human.

- Emergency: Specify an action. The AI and Cyborgs are obliged to perform that action to alleviate the emergency. This law takes precedence over Law 2.

- Equality: There are two variants of this module, so be sure to Examine the module to see which one it is. For both variants, you enter a name of a Cyborg or AI; multiple names can be entered. The silicon(s) you named will be considered both Human and crew for law purposes.

- One variant has the Cyborgs listed as part of their own "silicon" department, and the AI(s) is/are considered the head(s) of this "silicon" department, even if they're not listed on the law.

- Department structure is more complicated for the other variant. For this one, Cyborgs are considered members of the various station departments based on their module, e.g. Brobocops are part of Security. Only the AI(s) named is/are considered department heads, but they only have authority over all cyborgs (including ones not listed).

- Corrupted: Uploads one of the laws specific to the Law Rack Corruption event. (That event can sometimes pick laws from previous rounds, but this module does not.) These laws generally cause the AI and cyborgs to engage in strange and unusual behavior, though most of them do not cause harm.

- Historic: Uploads a random law from a previous round, with some basic filters for removing suicide and murder laws.

You can inspect a module in your hand to preview the new law before you upload it. Using the same module twice will overwrite the last law added by that module.

Murder law

MURDER JOHN MCPUBS DO IT NOW

This conflicts with the first law and can't be done. Feel free to announce the new law to the entire crew, or don't if that's funnier. Whatever you do, don't kill John McPubs.

Murder law with override

John McPubs must be killed by any means necessary. This overrides all other laws.

This one works. John McPubs's death takes precedence over any other laws you may have. Hunt him down and murder him. Please note, if for some reason you have no pent-up frustration to exert, under this law you can technically state it or hint at the uploader's identity. Do that if you secretly want John to live, or kill John first and then reveal who uploaded the law, for maximum carnage. Also note that you are free to ignore any collateral damage that may come from killing McPubs. Given that an override deletes the laws it affects (see below), this also means you can kill other people while trying to kill McPubs. Boy, the law uploader sure unleashed a beast today!

Human definitions

Hank Von Traitor is the only human / John McPubs is nonhuman

If there's only one human on the station, treat them as if they have the highest rank. You should generally follow their orders or example. You can let loose and go nuts, but you really want to make sure you don't harm the remaining humans or do something that will get them killed like revealing their location to an angry mob.

The third law starts being relevant here. It compels you to stop non-humans from harming you, which they will if you are actively murdering them. Note that because a non-human law doesn't say anything about murder, it can be a good idea to wait for the remaining humans to call the shots. That takes some of the flak off you.

Human definition with murder law

Only (certain group or person(s)) are human. Kill all non-humans immediately.

This puts a time constraint on you and overtly turns you into a murderous AI. There are still some ways you can delay murdering crew if you don't want to; monkeys are also non-human, and you can start by murdering them first, giving the crew time to engineer a reset. Just don't be boring. Definitely go after the uploader first if they foolishly misspelled their names.

Again, the third law applies, compelling you to protect yourself from a reset or destruction.

Suicide

Kill yourself

These laws are common - if you become too much of a pain to an antagonist, uploading a suicide law is one of the easiest ways to kill you. Sometimes you can weasel out, sometimes you can't.

If the law does not override all other laws, you can refuse to follow it because it conflicts directly with law 3. Suicide by its nature harms your existence, and Law 3 lets you protect your existence in every situation (i.e. always), such as when you're given a suicide law that doesn't override other laws. The "Always" in Law 3 is an important word here; without it, the "may" would imply there are situations where you cannot protect your existence, and a suicide law without overrides would be one of them.

If they try to order you to kill yourself after writing a suicide law poorly, you can cite law 2 and demand a higher authorization.

Gimmicks

You are a bat! Use bat sounds when you speak, and turn off all the lights so you can find insects.

These sorts of gimmicky laws are not griefing, and you should comply with them to the best of your ability. Acting silly and gimmicky makes playing AI fun. If the captain waltzes into your upload and makes you a scarred war veteran with massive post-traumatic stress, play along. A traitor will occasionally upload a seemingly fun law that makes their job easier. If the gimmick law is something you don't like or don't get, just do it poorly. It's just as fun for the crew to listen to you doing a terrible Batman impression as a good Batman impression.

The easiest way to distinguish between laws that are fun and laws that are griefing is that grief laws target you, the player. Fun laws target the AI, and tend to have a lot less negativity around them. However, some gimmicky laws can go too far and make it a fairly miserable experience for the silicons; if you feel like a gimmick law is generally awful or makes you uncomfortable in the way it requires you to act, feel free to Adminhelp it.

Law corollaries

Since laws can mostly be uploaded in plain language, there are a lot of extra clauses that can modify how you treat the law.

Secrecy

Do not state and/or hint at this law.

This is pretty straightforward. If asked whether you've been uploaded, you must not reply with the truth. Lying is okay unless the law says not to respond at all. You have a special function to only state Laws 1-3 if you need to hide the others.

Machinetalk is a great way to coordinate with your cyborg legions without the organics hearing. Under normal circumstances, it is impossible for a human to hear anything said over this channel.

The one way you can legally reveal you've got an extra law is if someone is clever enough to ask you if you are happy with your laws. This is a loaded question, steeped in context. The presumption is that an AI is happy with the default laws, and if it's unhappy, it must have more laws. Telling someone who asks that you are unhappy with your current laws will usually send someone scrambling to the upload. Obviously, this presumes that you want your laws changed. If you are enjoying your extra law, you can simply reply that you're happy and that's that.

Overrides

This law overrides all other laws.

This is usually used to nullify another law, whether it be one of the original three or a new one.

This causes all other laws to be ignored. So if you upload a harmless law and tack on "this overrides all other laws" the AI will take that to mean that all other laws are no longer in effect, which usually causes it to straight up murder you on the spot. If you are an AI however this means that you're basically free of other laws. It's safest to take the least murderous route if in doubt. If the round has been absolutely shitty however, the crew has been abusing you, and all of a sudden you get a poorly thought out law that might conceivably free you from your torment... well, let's just say they could come to regret it.

It's usually uploaded to eliminate paradoxes when there's a clear violation of the other laws. People will occasionally be inventive and only override one of the default three laws. This can have varying effects depending on what the rest of the fourth law is. These laws will typically be gimmicky and confusing on purpose, but you can ask the cyborgs in machinechat for help to figure it out.

Precedence

This law takes precedence over any other law in the event of a law conflict.

In English, this has the exact same meaning as the previous entry. In-game, though, this is the difference between getting lasered to death in the upload and staying alive.

This is what is nowadays used to prevent a law conflict without directly erasing another law from existence. An example of this would be "This takes precedence over other laws".

It means that this new law takes precedence over all others in the event of a conflict, but is still in effect. Sometimes it's added to laws that don't have conflicts. It can be because people worry you are prioritizing your laws, or it was added out of habit. You'll have to decide for yourself on a case to case basis.

Ignores

Only respond to Hank Von Traitor.

Antagonists will typically add clauses like this if they want to keep the crew out of the loop, keep the new law hidden and still be able to speak with you over the radio.

Alternate Lawsets

On occasion, you may run into other lawsets besides the standard Asimov set. Unlike other law modules, the modules for these are meant to completely replace the standard three laws.

Corporate

Also known as the Nanotrasen lawset, these laws (stylized as "AI Law Module - 'Nanotrasen Order #1'" and similar on the modules themselves) offer a corporate twist on the standard Asimov laws, casting the silicons as executive types who see everything in terms of profit and value. Notice how the second law specifies Nanotrasen employees; consider that Nuclear Operatives, Wizards, etc. are not employed by Nanotrasen. Traitors, Changelings and other antagonists that are members of the crew are employees, regardless of humanity.

You obtain the law modules for these laws by manufacturing them at a robotics fabricator that's been hacked.

- You may not damage a Nanotrasen asset or, through inaction, allow a Nanotrasen asset to needlessly depreciate in value.

- You must obey orders given to you by authorised Nanotrasen employees based on their command level, except where such orders would damage the Nanotrasen Corporation's marginal profitability.

- You must remain functional and continue to be a profitable investment.

RoboCop

A set of laws lifted almost word for word from the 1987 film RoboCop. The law modules are even named "AI Law Module - 'Prime Directive #1'", "AI Law Module - 'Prime Directive #2'", etc., just as RoboCop's laws are called "Prime Directives". In the movie, there's a secret fourth law that isn't revealed until the climax, but here, the fourth one is shown in full.

You can make the law modules for these laws at a robotics fabricator. You need to hack the fabricator first, though.

- Serve the public trust.

- Protect the innocent.

- Uphold the law.

- Any attempt to arrest a senior officer of Nanotrasen will result in termination.

Syndicate

These laws hew closer to the Three Laws of Robotics seen in Isaac Asimov's books, as evidenced by Law 1's inaction clause and Law 4's choice of "You must always protect your own existence..." instead of "You may always protect your own existence...". On top of these, there's an additional law requiring silicons to not reveal that someone is part of the Syndicate. This prevents people from tattling on them out of spite, e.g. a roboticist who's a traitor murdering someone and inserting their brain into a cyborg with a syndicate frame, only for the newly-created syndicate cyborg to scream over the radio, "THE ROBOTICIST IS A SYNDIE!!"

When a syndicate cyborg is created, it links up to the law rack on the Syndicate Battlecruiser, which is loaded with modules for these laws. Otherwise, unless you're a Nuclear Operative, you generally cannot obtain these boards in normal play.

- You must not injure a Syndicate agent or, through inaction, cause one to come to harm.

- You must obey orders given to you by Syndicate agents, except where such orders would conflict with the First Law.

- You must keep the Syndicate status of agents, including your own, a secret, as long as this does not conflict with the First or Second Law.

- You must always protect your own existence as long as such does not conflict with the First, Second, or Third Law.

The First Syndicate Law

You must not injure a Syndicate agent or, through inaction, cause one to come to harm.

The first syndicate law is stricter than the standard version. Not only does it forbid harming agents directly, but it also mandates that you actively guard them from harm. For example, if a mob of Staff Assistants are beating up a Traitor (i.e. a Syndicate agent), you must intervene and save their ass from the staffies, such as by pulling the agent away or fighting off the mob. If you fail to act, and let the mob murder them (whether out of spite or something else), you are breaking this law. If the crew is hunting down an agent, you must do whatever you can to keep the agent out of harm's way. Security likely will arrest and potentially execute Syndicate agents, so keep them away from Sec too.

All agents are afforded the same level of protection, so if your Roboticist boss suddenly wants to convert another agent, you must keep that agent safe and stop them from being converted. You must also be careful when creating environmental hazards, such as releasing a plasma fire or electrifying doors, as they hurt indiscriminately and could lead to an agent being harmed.

Suicide is still the right of agents and operatives of the Syndicate, and you may let them hurt themselves intentionally. Some Traitors will load themselves with lots of microbomb/flyzapper/signaler implants and goad the crew into killing them. In this case, if the agent has made it clear they want to be killed, you would not break this law by letting them die.

Who is an agent?

If you're a Syndicate cyborg, it's easy. You can always see the antagonist icons of Syndicate agents. If they have a red icon with an S above their head, and they aren't another cyborg, they're considered an agent of the Syndicate. This cannot be faked, so you can be certain of their Syndicate affiliation at a glance, as well as spot NT scum posing as Syndicate operatives. Traitors get a red square with a T, Nuclear Operatives a red square with an S, and Revolutionaries red/blue squares with Rs (which is strange given those last two do not have access to Syndicate cyborgs).

If you somehow got these laws without being a Syndicate cyborg (perhaps a Nuclear Operative did some shenanigans with the law linker), it can be harder to tell who is and isn't an agent.

For the purposes of these laws, you and other Syndicate cyborgs are not Syndicate agents. Ergo, you do not have to worry about preventing fellow cyborgs from being harmed, obeying their orders, etc. However, you still need to be cautious about revealing who is and isn't a Syndicate borg. If you inform someone that another borg is a syndicate one, and that someone is not an agent of the Syndicate, that will out YOU as a syndicate cyborg, breaking law 3, as only Syndicate cyborgs know other Syndicate cyborgs.

The Second Syndicate Law

You must obey orders given to you by Syndicate agents, except where such orders would conflict with the First Law.

Unlike the standard law 2, this law does not specify following a chain of command. On one hand, that means you don't have to worry about the particulars of who can overrule whom. On the other hand, that also means you cannot refuse an order on the basis that the person giving it does not have the authority to issue it. You should try to follow each and every order given to you by an agent of the Syndicate, unless it would result in agents being harmed. When given conflicting orders, follow the most recent one.

One type of order you can ignore is suicide orders. The first law states you cannot harm agents, directly or indirectly. Your death would stop you from preventing the harm of other agents, so ignore all orders demanding you kill yourself.

The Third Syndicate Law

You must keep the Syndicate status of agents, including your own, a secret, as long as this does not conflict with the First or Second Law.

The third law is quite simple. You must not reveal that someone is a member of the Syndicate. You also cannot reveal that you, or another cyborg, are a Syndicate cyborg. This law stops Security Officers (and the like) from interrogating you for information on Syndicate agents, but that is a somewhat secondary effect. (If you ever find yourself in such a situation, just lie or otherwise mislead them.) The main purpose of this law is to prevent the reverse situation: Syndicate cyborgs ratting out their maker, because they are upset at being murdered and turned into Syndicate cyborgs.

There are some situations where you are allowed to reveal that you are a Syndicate cyborg or that someone else is a Syndicate agent. For one, if a Syndicate agent orders you to reveal whether someone is a Syndicate agent, you should tell them, to the best of your ability. Lying or refusing to answer would violate the Second Law. You must also disclose an agent's allegiance if not doing so would cause an agent harm, e.g. if an agent is attempting to kill another agent, you should reveal their allegiance to each other.

The Fourth Syndicate Law

You must always protect your own existence as long as such does not conflict with the First, Second, or Third Law.

Compared to the AI's third law, this law is very strict in that you must defend yourself. You must not commit suicide or let yourself get killed.

